Summarization with GPT-J

import nlpcloud

client = nlpcloud.Client("gpt-j", "your_token", gpu=True)

generation = client.generation("""[Original]: America has changed dramatically during recent years. Not only has the number of graduates in traditional engineering disciplines such as mechanical, civil, electrical, chemical, and aeronautical engineering declined, but in most of the premier American universities engineering curricula now concentrate on and encourage largely the study of engineering science. As a result, there are declining offerings in engineering subjects dealing with infrastructure, the environment, and related issues, and greater concentration on high technology subjects, largely supporting increasingly complex scientific developments. While the latter is important, it should not be at the expense of more traditional engineering.

Rapidly developing economies such as China and India, as well as other industrial countries in Europe and Asia, continue to encourage and advance the teaching of engineering. Both China and India, respectively, graduate six and eight times as many traditional engineers as does the United States. Other industrial countries at minimum maintain their output, while America suffers an increasingly serious decline in the number of engineering graduates and a lack of well-educated engineers.

(Source: Excerpted from Frankel, E.G. (2008, May/June) Change in education: The cost of sacrificing fundamentals. MIT Faculty

[Summary]: MIT Professor Emeritus Ernst G. Frankel (2008) has called for a return to a course of study that emphasizes the traditional skills of engineering, noting that the number of American engineering graduates with these skills has fallen sharply when compared to the number coming from other countries.

###

[Original]: So how do you go about identifying your strengths and weaknesses, and analyzing the opportunities and threats that flow from them? SWOT Analysis is a useful technique that helps you to do this.

What makes SWOT especially powerful is that, with a little thought, it can help you to uncover opportunities that you would not otherwise have spotted. And by understanding your weaknesses, you can manage and eliminate threats that might otherwise hurt your ability to move forward in your role.

If you look at yourself using the SWOT framework, you can start to separate yourself from your peers, and further develop the specialized talents and abilities that you need in order to advance your career and to help you achieve your personal goals.

[Summary]: SWOT Analysis is a technique that helps you identify strengths, weakness, opportunities, and threats. Understanding and managing these factors helps you to develop the abilities you need to achieve your goals and progress in your career.

###

[Original]: Jupiter is the fifth planet from the Sun and the largest in the Solar System. It is a gas giant with a mass one-thousandth that of the Sun, but two-and-a-half times that of all the other planets in the Solar System combined. Jupiter is one of the brightest objects visible to the naked eye in the night sky, and has been known to ancient civilizations since before recorded history. It is named after the Roman god Jupiter.[19] When viewed from Earth, Jupiter can be bright enough for its reflected light to cast visible shadows,[20] and is on average the third-brightest natural object in the night sky after the Moon and Venus.

Jupiter is primarily composed of hydrogen with a quarter of its mass being helium, though helium comprises only about a tenth of the number of molecules. It may also have a rocky core of heavier elements,[21] but like the other giant planets, Jupiter lacks a well-defined solid surface. Because of its rapid rotation, the planet's shape is that of an oblate spheroid (it has a slight but noticeable bulge around the equator).

[Summary]: Jupiter is the largest planet in the solar system. It is a gas giant, and is the fifth planet from the sun.

###

[Original]: For all its whizz-bang caper-gone-wrong energy, and for all its subsequent emotional troughs, this week’s Succession finale might have been the most important in its entire run. Because, unless I am very much wrong, Succession – a show about people trying to forcefully mount a succession – just had its succession. And now everything has to change.

The episode ended with Logan Roy defying his children by selling Waystar Royco to idiosyncratic Swedish tech bro Lukas Matsson. It’s an unexpected twist, like if King Lear contained a weird new beat where Lear hands the British crown to Jack Dorsey for a laugh, but it sets up a bold new future for the show. What will happen in season four? Here are some theories.

Season three of Succession picked up seconds after season two ended. It was a smart move, showing the immediate swirl of confusion that followed Kendall Roy’s decision to undo his father, and something similar could happen here. This week’s episode ended with three of the Roy siblings heartbroken and angry at their father’s grand betrayal. Perhaps season four could pick up at that precise moment, and show their efforts to reorganise their rebellion against him. This is something that Succession undoubtedly does very well – for the most part, its greatest moments have been those heart-thumping scenes where Kendall scraps for support to unseat his dad – and Jesse Armstrong has more than enough dramatic clout to centre the entire season around the battle to stop the Matsson deal dead in its tracks.

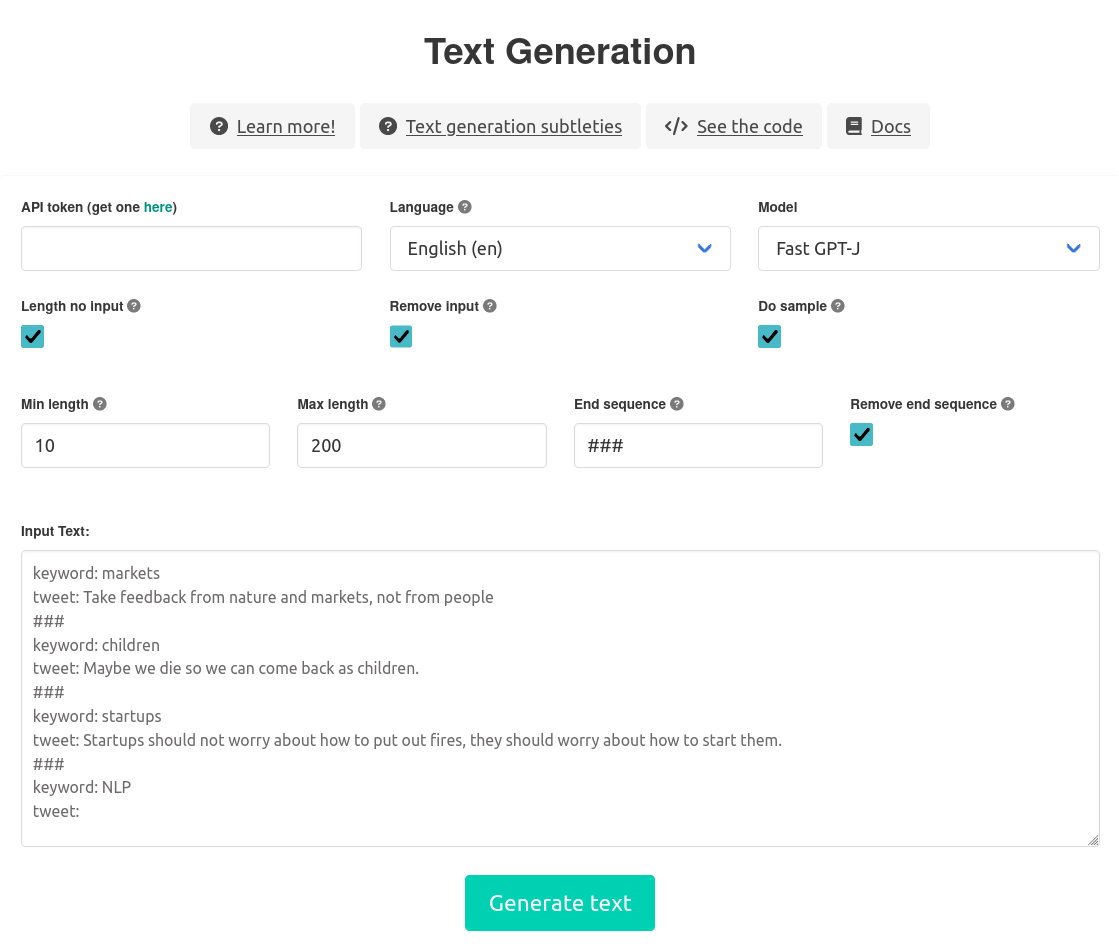

[Summary]:""",

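    length_no_input=True,        # min_length and max_length do not count the input text
    end_sequence="###",          # stop generating once this separator is produced
    remove_end_sequence=True,    # strip the separator from the returned text
    remove_input=True,           # return only the generated summary, not the prompt
    min_length=20,
    max_length=200)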

print(generation["generated_text"])

Output:

Season 3 of Succession ended with Logan Roy trying to sell his company to Lukas Matsson.

Text summarization is a tricky task. GPT-J is very good at it, as long as you give it the right examples.

The length and tone of the summary depend very much on the examples you create. For example, you would not write the same kind of examples for a simple summary aimed at kids as for an advanced medical summary aimed at doctors.
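Since the examples drive the output, it can help to keep them as data and assemble the prompt programmatically, so that switching audiences only means swapping the example list. The following is a minimal sketch, not part of the NLP Cloud client: the helper name `build_summarization_prompt` and the sample texts are hypothetical, and it simply reproduces the `[Original]:` / `[Summary]:` / `###` layout used in the request above.

```python
def build_summarization_prompt(examples, text, separator="###"):
    """Assemble a few-shot prompt from (original, summary) pairs.

    Hypothetical helper: it mirrors the prompt layout used above and
    is not provided by the nlpcloud library.
    """
    parts = []
    for original, summary in examples:
        parts.append(
            f"[Original]: {original.strip()}\n\n[Summary]: {summary.strip()}"
        )
    # The last block leaves the summary open for the model to complete.
    parts.append(f"[Original]: {text.strip()}\n\n[Summary]:")
    return f"\n\n{separator}\n\n".join(parts)


# Illustrative examples written for a young audience (made-up data).
kids_examples = [
    (
        "Jupiter is the fifth planet from the Sun and the largest in the Solar System.",
        "Jupiter is the biggest planet.",
    ),
]

prompt = build_summarization_prompt(kids_examples, "Text to summarize goes here.")
print(prompt)
```

The resulting string can be passed as the first argument of `client.generation(...)` with the same parameters as above.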

If your summarization examples do not fit within GPT-J's maximum input size, you might want to fine-tune GPT-J for your summarization task instead.
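A quick way to tell whether a prompt is getting too large is to estimate its token count before sending it. The sketch below is only a rough check under stated assumptions: it uses GPT-J's 2,048-token context window as the limit (a hosted API may enforce a different one), and it approximates tokens as about four characters each instead of running a real tokenizer. The function names are hypothetical.

```python
GPT_J_CONTEXT_TOKENS = 2048  # GPT-J's context window; a hosted API may differ
CHARS_PER_TOKEN = 4          # rough assumption for English text, not a tokenizer


def estimate_tokens(text):
    """Very rough token estimate based on character count."""
    return len(text) // CHARS_PER_TOKEN + 1


def fits_in_context(prompt, max_new_tokens=200):
    """Check whether the prompt plus the requested output likely fits."""
    return estimate_tokens(prompt) + max_new_tokens <= GPT_J_CONTEXT_TOKENS


short_prompt = "[Original]: A short text.\n\n[Summary]:"
print(fits_in_context(short_prompt))
```

If the check fails even after trimming the examples, that is a sign fine-tuning may be the better route.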