What is Tokenization?

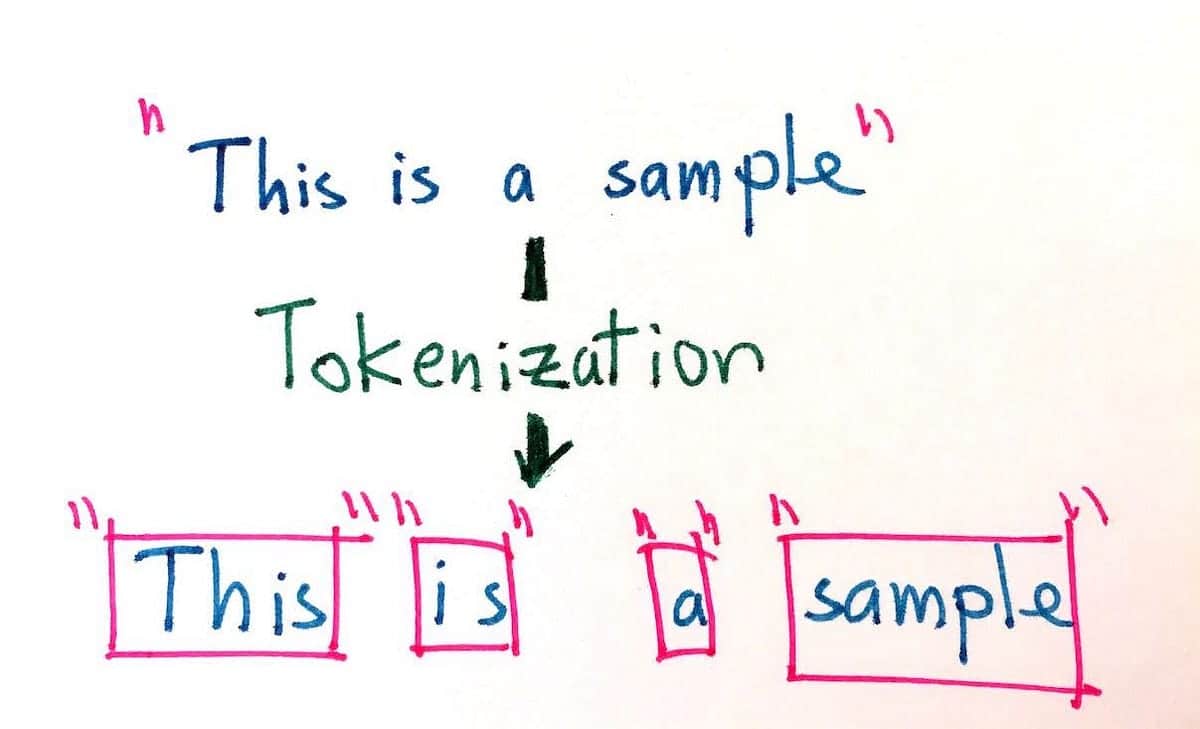

Tokenization splits a text into smaller units called tokens. What counts as a token depends on the type of tokenizer you use: a token can be a word, a character, or a subword (for example, the English word "higher" contains two subwords, "high" and "er"). Punctuation marks such as "!", ".", and ";" can be tokens too.

Tokenization is a fundamental step in most Natural Language Processing pipelines. Because languages are structured differently (for example, not every language separates words with spaces), tokenization works differently from one language to another.
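To make the three kinds of tokens concrete, here is a minimal sketch using only the Python standard library. The function names (`word_tokenize`, `char_tokenize`, `subword_tokenize`) and the tiny subword vocabulary are assumptions made up for illustration. Production tokenizers are considerably more sophisticated, and the greedy longest-match strategy used for subwords here is only one possible approach.

```python
import re


def word_tokenize(text):
    """Split text into word tokens and single punctuation tokens."""
    # \w+ matches a run of letters/digits/underscores;
    # [^\w\s] matches one character that is neither a word character nor whitespace.
    return re.findall(r"\w+|[^\w\s]", text)


def char_tokenize(text):
    """Split text into single-character tokens, skipping whitespace."""
    return [ch for ch in text if not ch.isspace()]


def subword_tokenize(word, vocab):
    """Greedy longest-match split of a word into pieces found in `vocab`.

    `vocab` is a hypothetical, hand-written set of known subwords.
    Characters that match no known piece are emitted one at a time.
    """
    tokens = []
    start = 0
    while start < len(word):
        end = len(word)
        # Shrink the candidate piece until it is in the vocabulary.
        while end > start and word[start:end] not in vocab:
            end -= 1
        if end == start:
            # No known piece starts here: fall back to a single character.
            end = start + 1
        tokens.append(word[start:end])
        start = end
    return tokens


print(word_tokenize("Hello, world!"))              # ['Hello', ',', 'world', '!']
print(char_tokenize("hi!"))                        # ['h', 'i', '!']
print(subword_tokenize("higher", {"high", "er"}))  # ['high', 'er']
```

The regular expression assumes that words are separated by spaces or punctuation, so this sketch would need a different strategy for languages that do not mark word boundaries that way.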

What is Lemmatization?

Lemmatization extracts the base form of a word, called its lemma (typically the form you would find in a dictionary). For example, the lemma of "apple" is still "apple", but the lemma of "is" is "be".

Like tokenization, lemmatization is a fundamental step in many Natural Language Processing pipelines. Because languages are structured differently, lemmatization works differently from one language to another.
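The idea can be sketched as a simple lookup from a word form to its lemma. The `LEMMAS` table and the `lemmatize` function below are hypothetical and cover only a handful of English words. Real lemmatizers typically rely on much larger dictionaries, morphological rules, and often the word's part of speech, so treat this as an illustration of the concept rather than a usable implementation.

```python
# Hypothetical, hand-written lookup table mapping word forms to lemmas.
LEMMAS = {
    "is": "be",
    "are": "be",
    "was": "be",
    "apples": "apple",
}


def lemmatize(token, lemmas=LEMMAS):
    """Return the lemma of `token`, or the lowercased token if it is unknown."""
    key = token.lower()
    # Words missing from the table are assumed to already be in base form.
    return lemmas.get(key, key)


print(lemmatize("apple"))  # apple
print(lemmatize("is"))     # be
```

Because the table is specific to English, supporting another language would mean supplying a different table or a different set of rules.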