What is Generative AI?

Generative AI is a fancy term for text generation models. These models take a piece of text as input and generate the rest of the text for you, in the spirit of your initial input. It is up to you to decide how long you want the generated text to be, and how much context you want to pass to the model in your input.

Let's say you have the following piece of text:

LLaMA 2 is a powerful Natural Language Processing model

Now, let's say you want to generate about 250 words from the above text. Simply send your text to the model and it will generate the rest:

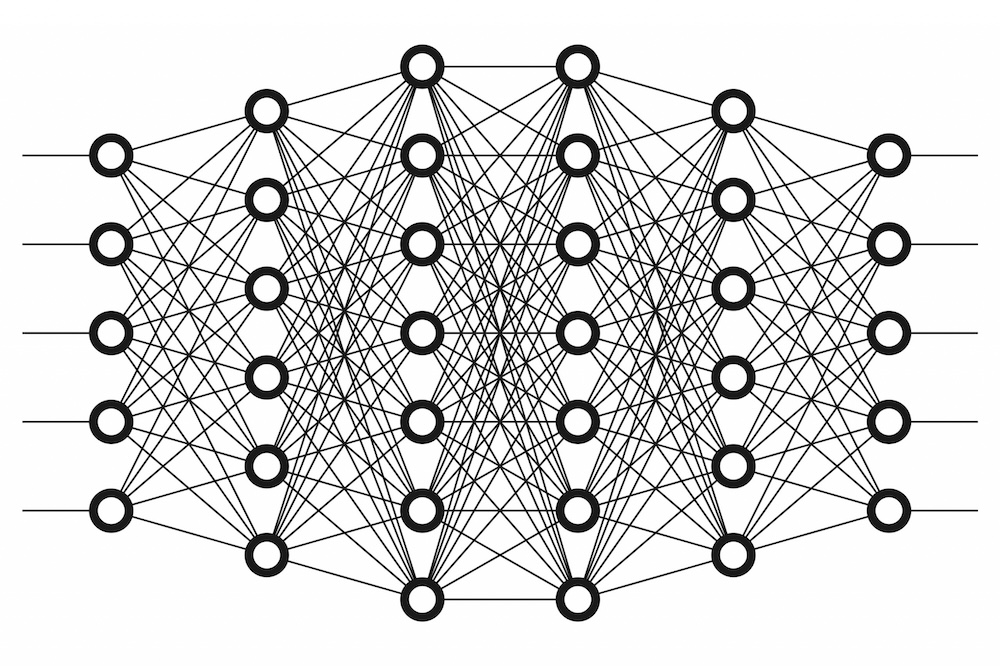

LLaMA 2 is a powerful Natural Language Processing model that does what every model should do: predict your future behavior. It's very effective at detecting patterns in your real-time input, predicting upcoming events and responses that you may not even consider. LLaMA 2's power consists of a set of internal neural processes that are extremely accurate. But at the same time that results are good, they need to be fast. So LLaMA 2 is designed to run on the server. To speed up your results, you may need to use the cloud. Here's why: you may be storing your business data in the cloud. And using the cloud may mean that you can't get your data into the Natural Language Processing process because you have to transfer everything over the cloud.
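LLaMA 2 itself is a large neural network, but the generation loop described above (take the input, predict what comes next, append it, repeat until the requested length is reached) can be sketched in a few lines. This is a toy illustration only: a word-level bigram counter stands in for the neural network, and `train_bigram_model`, `generate` and `max_new_words` are hypothetical names. Real models predict sub-word tokens, look at far more context than the last word, and usually sample instead of always picking the most likely continuation.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus: str) -> dict:
    """Toy stand-in for a language model: for every word, count which
    words follow it in the corpus."""
    words = corpus.split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(model: dict, prompt: str, max_new_words: int) -> str:
    """Greedy autoregressive generation: repeatedly predict the most
    likely next word from the last word and append it, until
    max_new_words words were added or no continuation is known."""
    words = prompt.split()
    if not words:
        raise ValueError("prompt must contain at least one word")
    for _ in range(max_new_words):
        candidates = model.get(words[-1])  # .get() does not add new keys
        if not candidates:
            break  # the toy model has never seen anything after this word
        next_word, _count = candidates.most_common(1)[0]
        words.append(next_word)
    return " ".join(words)


corpus = "the model reads the text and the model writes the rest of the text"
model = train_bigram_model(corpus)
print(generate(model, "the model", max_new_words=5))
```

The `max_new_words` parameter plays the role of the "how long" choice mentioned above; with a real model, the equivalent setting is usually expressed in tokens rather than words.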

Foundation generative models usually require some "prompt engineering" before they understand what you expect from them. You can read more about prompt engineering in our dedicated article about few-shot learning: here.
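One common form of prompt engineering is few-shot learning: you show the model a few solved examples, then leave the answer to the last one blank so the model continues the pattern. The helper below is a minimal sketch of how such a prompt can be assembled, assuming a hypothetical `Text:`/`Sentiment:` layout and a `###` separator between examples; neither is required by LLaMA 2, they are just one readable convention.

```python
def build_few_shot_prompt(examples, query, separator="###"):
    """Concatenate solved (text, label) examples, then the new input with
    an empty answer slot for the model to fill in."""
    blocks = [f"Text: {text}\nSentiment: {label}" for text, label in examples]
    blocks.append(f"Text: {query}\nSentiment:")  # left open on purpose
    return f"\n{separator}\n".join(blocks)


examples = [
    ("I loved this movie!", "positive"),
    ("The service was terribly slow.", "negative"),
]
print(build_few_shot_prompt(examples, "The food was delicious."))
```

Because a foundation model simply keeps generating, you would typically also stop the generation when the model emits the separator, so that only the answer to the last example is kept.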

Once fine-tuned on a specific use case, these generative models can give even more impressive results. Most modern generative models are in fact fine-tuned to follow human instructions without requiring any prompt engineering; these are known as "instruct" models. You can read more about how to use such instruct models in our dedicated guide: here.
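With an instruct model you state the request directly instead of providing examples, but the request is usually wrapped in the template the model saw during fine-tuning. The chat (instruction-following) versions of LLaMA 2 expect the instruction between `[INST]` and `[/INST]` markers, with an optional system prompt enclosed in `<<SYS>>` and `<</SYS>>`, following the format Meta published for these models. The sketch below assumes that format and covers a single-turn exchange only; the helper name is hypothetical, and the `<s>` beginning-of-sequence token is left out because tokenizers usually add it (check your tooling). Other instruct models use different templates, so refer to each model's documentation.

```python
from typing import Optional


def llama2_chat_prompt(user_message: str, system_prompt: Optional[str] = None) -> str:
    """Wrap a single user instruction in the Llama 2 chat format,
    optionally preceded by a system prompt."""
    if system_prompt:
        user_message = f"<<SYS>>\n{system_prompt}\n<</SYS>>\n\n{user_message}"
    return f"[INST] {user_message} [/INST]"


print(llama2_chat_prompt(
    "Summarize this text in one sentence: ...",
    system_prompt="You are a concise assistant.",
))
```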

You can address virtually any AI use case with generative models, as long as you use an advanced and versatile model: sentiment analysis, grammar and spelling correction, question answering, code generation, machine translation, intent classification, paraphrasing... and much more!
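With a versatile instruct model, switching from one of these use cases to another often comes down to changing the instruction while the model stays the same. The mapping below is a hypothetical illustration: the template wording, the `TASK_TEMPLATES` dictionary and the `build_task_prompt` helper are assumptions, and the resulting string would still need to be sent to the model, for example wrapped in whatever prompt format that model expects.

```python
# Hypothetical instruction templates: one model, many tasks.
TASK_TEMPLATES = {
    "sentiment analysis": "What is the sentiment of this text (positive, negative or neutral)?\n\n{text}",
    "grammar and spelling correction": "Correct the grammar and spelling of this text:\n\n{text}",
    "machine translation": "Translate this text into French:\n\n{text}",
    "paraphrasing": "Paraphrase this text:\n\n{text}",
    "intent classification": "Which intent does this message express (question, complaint, order or other)?\n\n{text}",
}


def build_task_prompt(task: str, text: str) -> str:
    """Fill the instruction template of the requested task with the user's text."""
    try:
        template = TASK_TEMPLATES[task]
    except KeyError:
        raise ValueError(f"unsupported task: {task!r}") from None
    # Only the template is parsed by format(); braces inside `text` are kept as-is.
    return template.format(text=text)


print(build_task_prompt("grammar and spelling correction", "I has two cat."))
```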